How many Kubernetes nodes should be in a cluster?

- The Tech Platform

- Dec 15, 2020

Updated: Feb 15, 2024

There is no single right number of nodes for a Kubernetes cluster. The answer depends on what your workloads need.

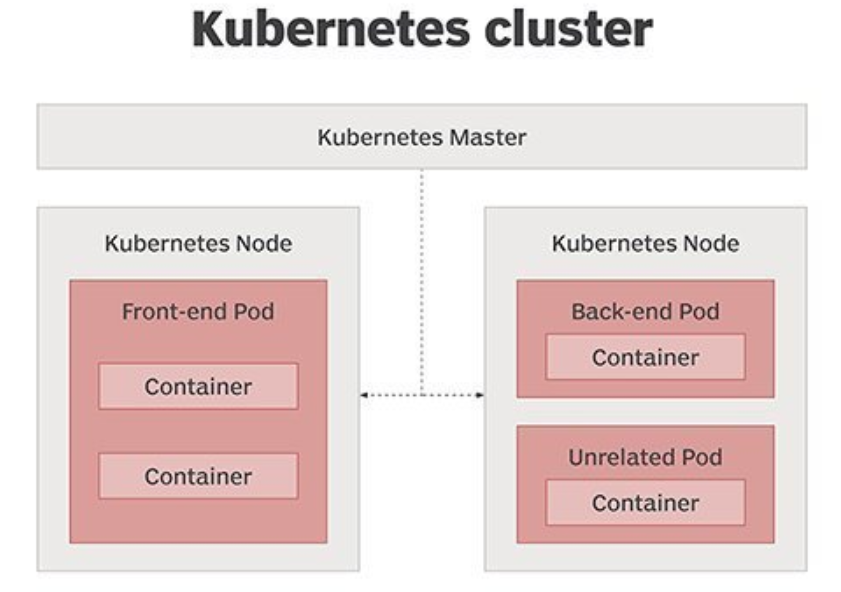

Kubernetes clusters are the backbone of modern containerized infrastructure, facilitating the deployment, scaling, and management of containerized applications. However, determining the optimal number of nodes for a Kubernetes cluster is a critical decision that can significantly impact performance, availability, and cost. In this article, we'll explore the factors that influence node count and provide guidelines for making informed decisions when configuring Kubernetes clusters.

Factors Influencing Node Count

Workload Performance Considerations:

When considering the number of nodes in a Kubernetes cluster, it's essential to evaluate workload performance requirements.

Each node contributes resources such as CPU, memory, and storage to the cluster.

Understanding the resource needs of your workloads is crucial for ensuring optimal performance and scalability.

High Availability Requirements:

High availability is another crucial factor in determining node count. A cluster with enough nodes, and with workload replicas spread across them, has the redundancy to tolerate a node failure without disrupting service.

Balancing node count with availability requirements is essential for maintaining cluster reliability.

Cost-Effectiveness and Resource Utilization:

Scalability and high availability have to be weighed against cost and resource utilization.

Every additional node increases infrastructure costs, and idle capacity is paid for whether or not it is used, so the goal is to strike a balance between performance, availability, and cost.
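The trade-off between availability and cost can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes cost is roughly proportional to total CPU cores, that one spare node is kept in reserve for failures, and the function name `plan_with_spare` is hypothetical.

```python
def plan_with_spare(required_cores, cores_per_node, spare_nodes=1):
    """Size a cluster for a workload plus spare nodes kept for failures.

    Returns (total_nodes, total_cores, overhead), where overhead is the
    extra capacity paid for, as a fraction of what the workload requires.
    """
    if required_cores <= 0 or cores_per_node <= 0 or spare_nodes < 0:
        raise ValueError("sizes must be positive and spare_nodes non-negative")
    # Integer ceiling division: nodes needed just to hold the workload.
    base_nodes = -(-required_cores // cores_per_node)
    total_nodes = base_nodes + spare_nodes
    total_cores = total_nodes * cores_per_node
    overhead = (total_cores - required_cores) / required_cores
    return total_nodes, total_cores, overhead
```

For a workload that needs 64 cores, `plan_with_spare(64, 16)` gives 5 nodes and 25% extra capacity, while `plan_with_spare(64, 4)` gives 17 nodes and about 6% extra. Smaller nodes make the spare cheaper, but every node also carries its own operating system and Kubernetes agent overhead, which this sketch ignores.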

Workload performance

Each node in a Kubernetes cluster contributes resources to support containerized workloads. Nodes may vary in terms of CPU, memory, and other hardware specifications. Understanding the resource allocation of each node is essential for optimizing workload performance and scalability.
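As a rough starting point, the node count can be estimated from the total CPU and memory the workloads request. The sketch below is a simplification under stated assumptions: all nodes are the same size, a fixed fraction of each node (`reserved_fraction`, a hypothetical parameter) is set aside for the operating system and Kubernetes components, and pods pack onto nodes perfectly, which real scheduling rarely achieves.

```python
import math


def min_nodes_for_requests(total_cpu_cores, total_memory_gib,
                           node_cpu_cores, node_memory_gib,
                           reserved_fraction=0.1):
    """Return the smallest node count that covers both CPU and memory requests."""
    if not 0 <= reserved_fraction < 1:
        raise ValueError("reserved_fraction must be in [0, 1)")
    if node_cpu_cores <= 0 or node_memory_gib <= 0:
        raise ValueError("node sizes must be positive")
    # Capacity left for workloads after the assumed system reservation.
    usable_cpu = node_cpu_cores * (1 - reserved_fraction)
    usable_memory = node_memory_gib * (1 - reserved_fraction)
    nodes_for_cpu = math.ceil(total_cpu_cores / usable_cpu)
    nodes_for_memory = math.ceil(total_memory_gib / usable_memory)
    # Whichever resource runs out first determines the node count.
    return max(nodes_for_cpu, nodes_for_memory)
```

For example, workloads requesting 40 cores and 120 GiB on nodes with 8 cores and 32 GiB come out at 6 nodes, with CPU as the limiting resource. Treat the result as a lower bound and confirm it with load testing.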

Importance of Resource Allocation and Scalability

Proper resource allocation is crucial for ensuring that workloads have access to the resources they need to operate efficiently. Kubernetes clusters should be scalable to accommodate growing workloads while maintaining performance and reliability.

Strategies for Optimizing Workload Performance

Implementing strategies such as horizontal pod autoscaling and vertical pod autoscaling can help optimize workload performance in Kubernetes clusters. These mechanisms automatically adjust resource allocation based on workload demand, ensuring optimal performance without overprovisioning resources.
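To see how horizontal pod autoscaling affects capacity planning, it helps to look at the core calculation the Kubernetes documentation describes: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The sketch below is a simplified model of that rule, not the controller itself; it leaves out stabilization windows, pod readiness, and missing metrics, and the function name and default bounds are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified horizontal pod autoscaler calculation."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas at 90% average CPU against a 60% target, this returns 6. The nodes must have room for the upper bound of replicas, or the extra pods will stay unscheduled until more nodes are added.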

High availability

The number of nodes in a Kubernetes cluster directly affects its resilience and fault tolerance. A cluster with an insufficient number of nodes may be more susceptible to service disruptions due to node failures.

Considerations for Fault Tolerance and Node Redundancy

Maintaining node redundancy is essential for ensuring high availability in Kubernetes clusters. By distributing workloads across multiple nodes, organizations can minimize the impact of node failures on cluster operations.
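The effect of spreading workloads can be estimated with a small calculation. The sketch below assumes replicas of one application are spread as evenly as possible across nodes and asks how many are lost if the most loaded node fails; the function names are hypothetical.

```python
def worst_case_replicas_lost(replicas, nodes):
    """Replicas lost when the most loaded node fails, assuming an even spread."""
    if replicas < 0 or nodes < 1:
        raise ValueError("replicas must be >= 0 and nodes >= 1")
    # Integer ceiling division: the fullest node holds this many replicas.
    return (replicas + nodes - 1) // nodes


def surviving_fraction(replicas, nodes):
    """Fraction of replicas still running after that single node failure."""
    if replicas < 1:
        raise ValueError("replicas must be >= 1")
    return (replicas - worst_case_replicas_lost(replicas, nodes)) / replicas
```

Six replicas on three nodes lose two replicas (a third of capacity) when a node fails, while the same six replicas on six nodes lose only one. On a single node, any node failure takes down all of them.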

Guidelines for Ensuring High Availability in Kubernetes Clusters

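As a general guideline, a highly available Kubernetes cluster should have at least three control plane nodes (formerly called master nodes), so that etcd keeps its quorum if one of them fails, plus enough worker nodes to run the workload with capacity to spare. Additionally, pod anti-affinity rules, which keep replicas of the same application on different nodes, and node taints and tolerations, which reserve nodes for specific workloads, can further enhance cluster resilience.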
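The three-node minimum comes from how etcd, the key-value store behind the Kubernetes API, keeps its data consistent: a write needs agreement from a majority (quorum) of members. The sketch below assumes one etcd member per control plane (master) node; the function names are illustrative.

```python
def quorum_size(members):
    """Smallest majority of an etcd cluster with the given member count."""
    if members < 1:
        raise ValueError("members must be >= 1")
    return members // 2 + 1


def failures_tolerated(members):
    """Members that can fail while a majority is still available."""
    return members - quorum_size(members)
```

One or two members tolerate no failures, three tolerate one, and five tolerate two. An even member count adds cost without adding fault tolerance, which is why odd sizes such as three or five are the usual choice.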

Physical vs. virtual machines

Organizations have the option to deploy Kubernetes nodes on dedicated physical servers, virtual machines, or a combination of both. Each approach has its advantages and disadvantages in terms of reliability, scalability, and cost.

Pros and Cons of Each Approach in Terms of Reliability and Cost

Dedicated physical servers avoid the hypervisor layer, which can give more predictable performance, but they are usually more expensive to buy and maintain and slower to add or replace. Virtual machines, on the other hand, provide flexibility and cost savings but add a layer of complexity, and several virtual nodes that share one physical host will fail together if that host goes down.
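One way to reason about the extra points of failure with virtual machines is to check how many Kubernetes nodes sit on the same physical host. The sketch below assumes you already have a mapping from node name to host name; the node and host names in the usage note are made up, and the function name is hypothetical.

```python
from collections import Counter


def largest_failure_domain(node_to_host):
    """Return (host, node_count) for the host carrying the most nodes."""
    if not node_to_host:
        raise ValueError("node_to_host must not be empty")
    # Count how many Kubernetes nodes run on each physical host.
    counts = Counter(node_to_host.values())
    host, node_count = counts.most_common(1)[0]
    return host, node_count
```

For a mapping such as `{"node-a": "host-1", "node-b": "host-1", "node-c": "host-2"}`, the result is `("host-1", 2)`: losing that one host removes two of the three nodes, so a three-node cluster laid out this way is less redundant than its node count suggests.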

Recommendations for Choosing the Right Infrastructure Mix

Finding the right balance between physical and virtual machines depends on factors such as workload requirements, budget constraints, and organizational preferences. In many cases, a hybrid approach that leverages both physical and virtual infrastructure can offer a good combination of reliability and cost-effectiveness.

Best Practices and Recommendations

When determining the number of nodes for a Kubernetes cluster, organizations should consider factors such as workload resource requirements, availability goals, and cost constraints. Conducting thorough performance testing and capacity planning can help identify the optimal node count for specific use cases.

Strategies for Achieving Optimal Performance, Availability, and Cost-Effectiveness

Implementing best practices such as workload optimization, node scaling, and infrastructure automation can help organizations achieve optimal performance, availability, and cost-effectiveness in Kubernetes clusters. Regular monitoring and performance tuning are also essential for maintaining cluster health and efficiency.

Real-World Examples and Case Studies

Examining real-world examples and case studies of organizations that have successfully optimized their Kubernetes clusters can provide valuable insights and best practices for other organizations to follow. Learning from others' experiences can help organizations avoid common pitfalls and make informed decisions when designing and managing Kubernetes clusters.

Conclusion

Determining the optimal number of nodes for a Kubernetes cluster is a complex process that requires careful consideration of workload requirements, availability goals, and cost constraints. By following the guidelines and best practices outlined in this article, organizations can design and deploy Kubernetes clusters that meet their performance, availability, and cost objectives. With proper planning, monitoring, and optimization, Kubernetes clusters can serve as reliable and scalable platforms for deploying containerized applications in production environments.
