The Standard Template Library (STL) is a (language built in) library for C++. This means, that C++ Standard defines everything in STL and compiler writers have to implement them, so that we, lesser mortals, can simply include and use them. STL consists of 4 major parts:

containers — data structures to store objects of the same type, with basic functionality (insertion, removal, lookup)

algorithms — set of helpful and reliable algorithms

iterators — objects mostly used to apply make algorithms work on containers, also to iterate and manipulate the data in the containers

functors — objects, that behave like functions, and are used by algorithms

We will now focus on the containers only, but will have to deal with others too.

As mentioned, containers are classes, objects of which are used to store other objects of the same type. There are not many containers in STL, so let’s meet them real quick.

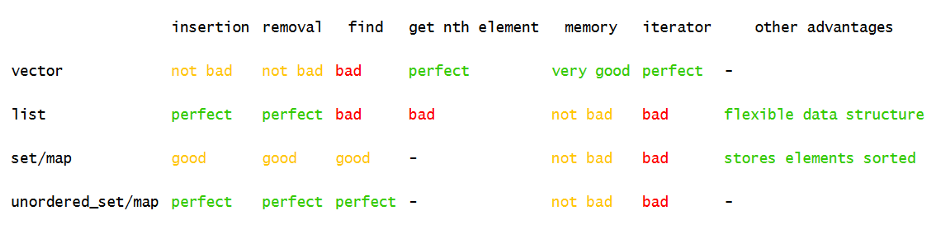

The most famous ones of them are vector, list, set, map, unordered_set and unordered_map. They are all containers, so generally they do the same thing. They provide interfaces to insert, remove and find elements in them, but these they do in a very different way, with different complexities and using different amount of memory.

The chosen one

So you are coding and suddenly you are in need of a container. Which one to choose? There are so many of them (master Skywalker), what are we going to do?

It’s not hard to understand that each and every one of these containers is really good at something (so that it’s a proud member of STL containers), but also has some flaws (otherwise it would be the one and only).

Let’s see which are the strengths and weaknesses of each. We will examine the following factors:

insertion

removal

find

get nth element

memory usage

iterator category

Container features

Now let’s get deeper and see why each container is so good at something and so bad at other things. For those, who know these, I’d suggest to jump to the next section, “Let the hacking begin”.

If the sequence of the elements in the container matters, we are limited to use only sequence containers, which are vector and list. To understand why exactly something is easy or hard for a specific container to do, we need to understand what is the underlying data structure (where and how the elements are stored).

vector

The underlying data structure of a `vector` is an array. Most of the time the size of the underlying array is bigger than the size of elements in it, so you have more space than you need. But this gives the ability to quickly insert an element to the end or remove the last one, just by keeping track of the number of elements. Of course, from time to time the size of the array may not be enough, so a new bigger one will be allocated, the older elements will be copied to the new one, and the old one will be destroyed. With this in mind, let’s see how good `vector` is of each factor mentioned above.

For insertion it provides two interfaces. First is the push_back function, which adds an element in the end of the container. vector can do this either really quickly, which is almost always, or really heavily, when it’s time to allocate a new array. The professional term for complexity is “amortized const time (O(1))”. It’s worth noting that everything is not that bad as sounds. In fact, if we insert 1 mln elements, and if the growth factor is 2 (every time the new array is twice as big as the old one), this thing will happen only for 19 insertions. Besides, vector provides a functionality to change the size of the underlying array at any point, so that if we have any clue about the number of the elements, we can reduce the occurrence of this process significantly. To sum up, vector is really good at adding elements in the end.

But it also provides a functionality to insert an element anywhere in the middle, with insert functions. In this case, all the elements coming after the desired position have to shift one position to the right, to make a space for the new one. In the worst case, which is inserting the new element in the beginning, all elements have to be shifted. The complexity is linear on the size of the container (O(N)).

The complexity and interface for removal is pretty much the same as for insertion. There is a pop_back function, which works in O(1), but this time, a real O(1) (no new allocation issues in case of removal). The erase function, on the other hand, shifts everything after the removed element back to the left. So its complexity is O(N) again.

The complexity of finding an element is linear (O(N)) too, obviously. No other way to do this, than simply checking every element, until we succeed (or not).

Getting the nth element, this is where vector wins it all. It’s very quick and of O(1) complexity.

Memory usage of it is also a pro. The underlying data structure is an array, so it could not be any better (ignoring the unused part of it, which can be taken care of easily).

The iterators of vector are of “random access” category. Now, this is the best thing about it, the only thing that other containers wish they had. This feature lets a lot of algorithms work way faster than with other iterators. Some of them ( std::sort ) even work only for random access iterators.

It’s worth to mention about the deque container, which has all the good features as the vector, and even provides push/pop_front functionality.

list

list, a.k.a. doubly linked list, is also a sequential container. Basically, it’s a collection of nodes, connected one to another. Each node contains a single element and two pointers to previous and next nodes. The list object itself keeps two pointers to the first and last node (for some implementations the size too).

For insertion and removal it provides the same functionality as vector, plus push_front and pop_front functions. Unlike vector, insertion in list is always at a very low cost, const time in fact. And it’s easy to understand: the new node can be created anywhere in the memory, it just needs to point its pointers to the new neighbor nodes and “ask” them to do the same. Or, in case of removal, it just needs to introduce his two neighbors to each other, before it’s gone.

You might think, why doesn’t vector have push/pop_front functions, if it’s available there somehow else. I mean, you can get an iterator to the first element with begin() and then insert/remove the first element with that iterator, right? That’s correct, but it’s all done intentionally. Just keep this in mind, we’ll discuss this later.

Finding an element in list has the same complexity and for the same reason, as in vector: O(N).

Getting the nth element, now that’s where the vector is winning. In case of the vector, after a simple calculation you know exactly at which address the nth element is, but for the list you have no clue. You just need to go one by one, from the beginning to end or otherwise.=

Whether the list is good from the memory usage perspective, depends on the object size of the elements. Remember, each element is stored in a node, with two pointers. So, if the size of the object is big, having two more pointers is not a big deal, but if it’s small, like if the elements are pointers, you are using 3 times more memory than you would do with a vector.

The iterators of list are of “bidirectional” category. This is not good. THis means that lot’s of algorithms will work slower, and some will not be available, for example the sort algorithm. However, list provides it’s own sort function, which has the same complexity as the other one.

It’s worth mentioning that list takes advantage of it’s underlying data structure and provides some other efficient and helpful functions.

set/map

If you don’t care about the insertion order, you can benefit from associative containers. The underlying abstract data structure of set and map is binary search tree. Most compilers implement it as a red-black tree (RB tree).

Before we go ahead and examine all the factors for these containers, let’s see what they can be used for. set is for storing unique elements in sorted order. This (automatic sorting), perhaps, is the best and most useful feature of it. map is pretty much the same, but instead of elements, it stores key-value pairs (sorting and everything else is based on the key).

For simplicity let’s assume that the underlying binary search tree is somehow always balance. This we can do, because, in fact, most compilers implement a so called red-black tree. Long story short, it is a binary search tree, which keeps itself balanced with a very low cost.

A binary tree is balanced if for any node the difference between the heights of the left and the right subtrees is at most 1.

A binary tree is balanced if the difference of the distance to the root between any two leaves is at most 1. The insertion, removal and finding an element have the same logarithmic cost (O(logN)). This, because to find an element we have to go to the bottom of the tree (at maximum). Starting from the root, we do a comparison at each level and go down by one, until we find what we are looking for, or to the end (leaves), if it doesn’t exist. But how tall is this tree? As each node has two children, each level can have twice as much nodes as the last one, i.e. at the first level we have 1 element, in the next 2, then 4, 8, 16 and so on. So, if we have a tree with L fully loaded levels, the total number of elements will be:

1 + 2 + ... + 2^L = 2^(L + 1) — 1Remember, the question is, if we know the number of nodes, and also, know that it’s balanced, how many levels are there? Mathematically speaking, for a given number of nodes (N) we have to find the number of layers (L), so that:

2^(L+1)-1 >= N and 2^L <= N

L >= log(N+1)-1 and L <= log(N)Generally speaking, there are log(N) levels in a balanced binary tree of N nodes.

As stated above, for associative containers it’s impossible to get the nth element.

As for memory, these containers use about as much as the list. This, because each value is stored in a tree node, and each tree node has pointers to its left and right children, and for some implementations they may even have pointers to the parents.

The iterators of these containers are bidirectional. As discussed for the list, this iterators are not the best in the business, but they still are something.

There are also multiset and multimap containers, which are basically the same, with one difference: keys are not unique for the latter.

unordered_set/unordered_map

These containers joined the STL container family pretty late, since C++11. The abstract data structure for them is the well known hash table. The best thing about it is that everything it does, it does almost instantly. You may have realized that so far each container is good at something, but also really bad at something else. It’s not the case for these containers. The insertion, find and removal of elements takes const time (O(1)). But… but… sir… if they’re so good, how come they didn’t make others obsolete?

Because they are not perfect. They are associative, which means, unlike vector and list they don’t store data in the insertion order, and neither sorted. Besides, if the key type is not a standard one, you have to think of a hashing function, on which the overall performance depends. To understand how everything is O(1) for them, let’s see how a hash table is implemented.

Imagine you need to store key-value pairs, keys of which are integers of a specific range. Instead of using a map you could simply use a vector. This would give an advantage to get the value from the key instantly. What if I told you, there’s a trick to simulate this? So, your keys can be of any type, but if you have a so called hash function to generate an integer for a key, you can have the elements stored in a vector and access them instantly. It’s something like this:

uint32_t hash(const KeyT& key)

{

// generate and return some value

}It takes any value of type KeyT and returns a 4 byte unsigned integer. In a perfect world, we could have a perfect hash function, so that if we had, let’s say, 100 values of KeyT, we could have an array of size 100, and the modulo of 100 ( mod 100 ) of these hash values would be different, and we could store, remove and find instantly. However, the reality is a little far from this, so there are some workarounds. One of the approaches (perhaps the best one) is to have an array of so called buckets, which are nothing more than a list of elements. So, if you want to insert, remove or find an element, you hash it, use this hash value as an index to (instantly) find the bucket, then add, remove or find in this list. Of course, finding in list takes linear time, but not if it’s size is always small. The idea is to always keep track of the load_factor, which is the average size of a bucket, i.e. size / bucket_count, and when this amount is bigger than some constant max_load_factor, a rehashing occurs. It’s when we add a new bucket. It sounds easy, just add a new bucket, but all the existing elements have to be redistributed. That is because, if we previously had 10 buckets, and had to insert an element, the hash value of which is 149983, we stored it in the 3rd bucket ( 149983 mod 10 = 3 ). And now, if we just add a new 11th bucket, and look for this element, we will go and look for in the 9th bucket ( 149983 mod 11 = 9 ), where we won’t find it. This scenario is pretty much like the one where vector needs to grow its capacity. Of course, like std::vector, std::unordered_set and std::unordered_map provide a functionality to escape this heavy rehashes if you know approximately how many elements will you be storing.

The performance of a hash table very much depends on the hash function. The most important and, perhaps, most interesting part of using these containers is to think of a good hash function. There are lot’s of great articles that explain how to come up with a good hash function. The main goal there is to reduce the probability of a key collision.

Key collision is when two distinct values have the same hash value. This means, they will be stored in the same bucket. So if we have a bad hash function which allows key collision very often, or in the worst case, returns the same value for every input, our hash table will turn into a list (all values will have to be stored in the same bucket). Of course it’s not always possible to escape key collisions. First of all, even if the hash values are different, their “mod”s can be the same. Besides, if your hash value type is a 32 bit unsigned integer, meaning that it can have 2³² different values, your input can be of such a type, that there are way more possible inputs. For example, if the input is of type string and their lengths are 10, there can be 2⁸⁰ different inputs, which means, that it’s impossible to not have a key collision. So our problem is to make key collision rare. One of the techniques is to make the output uniformly distributed in the codomain ( [0, 2³²-1] ). Another technique is to use all the parts of the input. For example, if your inputs are 10 length strings, and if you use only the first four characters, there is a big chance, that your keys will collide often (e.g. inputs are file paths with the same beginning). On the other hand, this will make hash function slower.

In the end, the choice of a hashing algorithm very much depends on the situation and inputs.

STL provides std::hash function for all basic types, which are very well designed, so most of the time you don’t even need to worry about it, or you can construct your own hash function based on them.

So, with these in mind let’s go ahead and see how these containers behave.

Insertion for them is amortized const time: almost always it is very fast, it just needs to hash the value and insert in the corresponding bucket. It’s only a pain, when a rehashing is needed.

Removal is precisely const time: it’s the same as removing from a list.

Find complexity is also const time, but remember, that const time doesn’t mean fast. How fast it is depends on the max_load_factor. All the famous compilers have taken care of a good max_load_factor, so that find is really fast.

Of course these are associative containers, so no point to talk about the nth element.

Memory usage of these containers is too much. It stores a vector of lists. I think the rest is obvious.

The iterators of it are bidirectional ones, the same story as with the map and set.

Aren’t these enough?

So we reviewed all the containers that STL provides, their pros and cons, and the bottom line was that there is no single best container, each one is good for a specific scenario, and there are so many of them, that most of the time you can just use one of them, not create your own. But, of course, people who came up with these containers, or the ones who implemented them, don’t know what you will need them for, how unusual and uncommon your scenario might be. Let’s consider the container I implemented: prefix_tree. It’s a substitute to the std::map<std::string, T> for any type T. Of course, I’m not the one who came up with this great idea, I just implemented it. So what’s the scenario in which the prefix_tree is better than existing containers?

Without going too deep into the data structure under the hood, let’s just say the complexity of the find for it depends on the length of the string we are looking for, whereas, remember, for map (binary search tree) it depends on the number of elements in it. This means, that asymptotically (after some N number of elements) find will be faster on prefix_tree.

Depending the implementation it can also be the winner from memory usage point of view. My first version of implementation was doing this, so if you had a lot of elements with the same beginning, it would use significantly less memory. But when I decided to make it STL compatible, specifically when I tried to implement reverse_iterator based on already implemented iterator, it turned out that my iterator did not meet the Standard requirements and I had to change the whole data structure for the container to meet them, and lose this advantage. So, before you go ahead and write your own STL compatible container, you should ask yourself, is it worth making that container STL compatible?

The last paragraph of this section is especially for professionals. I want to describe the problem I faced, mentioned above, with the hope that someone can come up with a better solution.

So, the problem is that in my initial implementation each node has a character and a pointer to a mapped type. If this pointer is not null, we can go up the tree and collect all the characters, resulting a string, which is mapped to the value our pointer points to. But the value_type of my container is defined as std::pair<const key_type, mapped_type>, and the access functions of the iterator had to return pointer or reference to an object of this type. At first, I solved the issue by making a proxy object of this type as a member of the iterator. It should have the iterator’s lifetime, the iterator had a pointer to the node, and when it changed to point to another node, it calculated the key all over again and updated the proxy member. It was all fine until I added reverse_iterator. STL provides an adaptor class std::reverse_iterator, which you can use to make reverse_iterator based on your properly defined iterator. I thought that mine is properly defined, but it was not. I found about it thanks to the unit tests, which failed when dereferencing a reverse_iterator. In GCC’s implementation of std::reverse_iterator in operator* it creates a temporary iterator, decrements it and dereferences, returning the result. In this case this pointer pointed to the proxy object inside the temporarily created iterator, which already got out of scope and destructed. While digging in the sources of GCC I found out that once this was considered a bug in implementation. This bug is not fixed yet, it’s considered invalid and the implementation is valid, because the standard (24.2.5 [forward.iterators]/6) clearly states that: If a and b are both dereferenceable, then a == b if and only if *a and *b are bound to the same object. This was the problem with my iterators. Every iterator had its own object to be bound to, even when pointing to the same node. To solve this I simply changed the structure of the node, so that now it stores a proper value_type, i.e. std::pair<const key_type, mapped_type>.

Let the hacking begin

We are going to implement an associative STL compatible container, which has to be a substitute of the existing map container. Actually, this is a good news, because we can simply look at its interface and repeat everything. Not only we can, but we should. If our container is claiming to be substitute, it’s expected to have the same interface.

When it comes to writing the code, the “up to bottom” approach is easier for me. That is, I start with the interface, define all functions, and implement them based on another layer of implementation. In this case I kind of used the “pimpl” technique, except my goal was not to hide the implementation, so it’s not a pointer to the implementation, just the implementation. So, I suppose I have the core implementation of this container, which doesn’t know about the outer abstractions and has the simplest possible interface (insert, find, remove). The main class has a member of it and refers to it.

Now, let’s take a look at the map interface and make our one as close to that as possible. Here is it’s definition:

template< class Key,

class T,

class Compare = std::less<Key>,

class Allocator = std::allocator<std::pair<const Key, T> >> class map;Of course it’s a template class with four arguments. Our container will have almost the same parameters.

template <typename StringT,

typename MappedT,

typename AllocatorT = std::allocator<MappedT> >

class prefix_treeI’ve made some changes. First, the Key is renamed to StringT, just to contain an additional hint, that the key type of this container has to be a string type. It’s a bit easy to say but hard to understand, what is a string type. Basically, it is required to be an STL compatible sequence container. The Compare parameter is also gone, because we don’t need it now. For now, it’s enough that the characters of StringT have std::hash defined for them (which is mostly the case). Keep in mind that mostly std::map is used with two template parameters, key and value types. Now we support the same interface. Moving on.

Another important part of STL containers is the public definitions. The ones of std::map can be found here. In our case they are:

public:

using key_type = StringT;

using mapped_type = MappedT;

using value_type = std::pair<const key_type, mapped_type>;

using char_type = typename key_type::value_type;

using size_type = std::size_t;

using allocator_type = AllocatorT;

using reference = mapped_type&;

using const_reference = const mapped_type&;

using pointer = typename std::allocator_traits<allocator_type>::pointer;

using const_pointer = typename std::allocator_traits<allocator_type>::const_pointer;

using iterator = prefix_tree_iterator<key_type, char_type, mapped_type>;

using const_iterator = prefix_tree_const_iterator<key_type, char_type, mapped_type>;

using reverse_iterator = std::reverse_iterator<iterator>;

using const_reverse_iterator =std::reverse_iterator<const_iterator>;These definitions are not only helpful, so that will make your coding easy and the code readable, but also are important. They can and will be used, not only by the STL, but also other users. As a matter of fact, we have already used those definitions of other classes in this code. See the lines 5, 10, 11. We expect, that the key_type, which is the template parameter StringT, will have a public type definition named value_type, and the most famous string, the std::basic_string does have that.

The definitions are pretty much the same as in map, with one addition, char_type. Actually it’s there just in case, it doesn’t even have a real usage. It’s more of a philosophical question, whether or not a container should have this or that functionality or definition. We will get to this later. I added char_type, because I thought this type is a crucial part of this container, and it would be good to have a separate definition of it, thus highlighting it’s importance.

Next up, the constructors, assignment operators and destructor. Those of std ::map are here. In our case, it will be:

public:

/* Constructors, assignment and destructor */

explicitprefix_tree(const allocator_type& a = allocator_type());

prefix_tree(const prefix_tree&) = default;

prefix_tree(prefix_tree&&) = default;

prefix_tree(std::initializer_list<value_type> il, const allocator_type& a = allocator_type());

template <typename FwdIterT>

prefix_tree(FwdIterT first, FwdIterT last,

const allocator_type& a = allocator_type());

prefix_tree& operator= (const prefix_tree&) = default;

prefix_tree& operator= (prefix_tree&&) = default;

~prefix_tree() = default;The important thing to keep in mind is that every STL container has a default constructor (mostly with an optional allocator parameter), a constructor from std::initializer_list (for newer standards) and a constructor with two iterators. It should also have proper copy (and move) constructor and assignment operator, and of course a destructor. Sure there may be other constructors too, like vector and list have fill

constructors, but these are the basic ones.

Then we have the selectors and mutators.

/* Selectors */

const_iterator find(const key_type& key) const;

size_type size() const;

size_type max_size() const;

bool empty() const;

reference at(const key_type& key);

const_reference at(const key_type& key) const;

/* Mutators */

iterator find (const key_type& key);

std::pair<iterator, bool> insert(const key_type& key, const

mapped_type& value);

std::pair<iterator, bool> insert(const value_type& value);

iterator insert(const_iterator hint, const value_type& value);

reference operator[] (const key_type& key);

void erase(const key_type& key);

iterator erase(iterator pos);

void clear();You can see here, that std::map has more functions, especially ones which are available since C++17. Maybe later I will add those for perfect matching, but now, the important ones are here. This is the part when you let your imagination and creativity free. You have to provide some basic interface, but also you are free to add anything. Obviously, by basic functionality we mean functions to add, remove and find an element (this is a container after all). You should be careful with the names and the signatures.

As for insertion, it depends on the type of container (whether it’s a sequence one or not). If it is a sequence container, you should consider the following functions:

push_back

push_front

pop_back

pop_front

This is important, because STL adaptors, like std::stack and std::queue lean on these functions. Keep in mind, that it’s not necessary to have them all. vector for example has only two of them (the “back” ones).

Philosophy time. If you have enough experience with STL, you already know that STL containers don’t provide all the functions in the world, so that you can simply call them. They only provide functions which will work relatively fast. This is a bit hard, but important to understand. Let’s take QVector container from Qt library. It’s a substitute for std::vector, and provides push_front function, and the official documentation claims that this will take linear time. The fact that std::vector doesn’t provide a separate function for this, doesn’t mean you can’t do the same for it. You can use the insert function with an iterator returned from begin(). In other words:

std::vector<int> sv;

QVector<int> qv;

//...

sv.insert(sv.begin(), 42);

qv.push_front(42);Yes, you can do the same with std::vector, but with a bit more effort, and this is done intentionally. The idea is that if you simply call a function on a class, you should expect it to work fast enough, and if you want to do something else with this container, which is not that easy, it shouldn’t be that easy for you too.

But this was not our case, our container is not a sequence one. We should have insert function(s). I have 3 of them. Let’s discuss them one by one.

The first one is on 11th line, it has nothing to do with STL compatibility, std::map doesn’t have one with this signature, just added by me. The second one, on line 12, is the insertion function signature for associative containers, it takes an element of value_type and inserts in it’s right place. The return value (a pair of iterator and boolean) of it is also the same as for std::map and std::unordered_map. The most interesting is the third one. You can see, it takes an iterator and a value as an input and returns an iterator. All STL containers have insert function with this signature. This is done to support the idea of container independent code. Scott Meyers has a great item in his legendary book “Effective STL” about this idea, it’s definitely worth checking out. The idea is to write such a code, that can work for any container, so that if you change one type definition, everything else will still work. As Meyers states, this idea is a nice, but dangerous one. You shouldn’t always try to make your code container independent, especially when the chances of it to be useful are practically 0. However, once I needed this feature of STL containers and it was really nice, that they have taken care of this. Take a look at this code of mine.

template <typename ContainerT>

ByteArray toByteArray(const ContainerT& container, typename std::enable_if<Generic::is_stl_container<ContainerT>::value>::type* /*= nullptr*/)

{

ByteArray result;

result += toByteArray(static_cast<uint32_t>(container.size()));

for (constauto& item : container)

{

result += toByteArray(item);

}

return result;

}

template <typename ContainerT>

int fromByteArray(const Byte* data, ContainerT& container, typename std::enable_if<Generic::is_stl_container<ContainerT>::value>::type* /*= nullptr*/)

{

int bytesRead = 0;

uint32_t containerSize = 0;

bytesRead += fromByteArray(data, containerSize);

for (uint32_t i = 0; i < containerSize; ++i)

{

typename ContainerT::value_type value;

bytesRead += fromByteArray(data + bytesRead, value);

container.insert(container.end(), value);

}

return bytesRead;

}The case is, I have written two template functions and I want them to work for any container. Take a look at the lines 4, 6, 19 and (especially) 21. So, thanks to the fact that all STL containers provide the same basic interface, this code works for me. All of them have size member function, begin and end functions and proper iterators, value_type public type definition, and of course insert functions, which takes iterator and value, returns an iterator. It’s worth mentioning that for sequence containers the iterator, that is the input of this insert function, affects on where the new element is inserted, but it’s not the case for associative containers. That’s why the name of this parameter is hint for map, and most implementations don’t even use this parameter, neither does my prefix_tree. It’s just there to meet the generic interface.

The story is pretty much the same about removal. Here we have two erase functions, the second of which (line 16) takes an iterator as an input and returns an iterator. This version is the generic functionality of removal.

As for the other functions, size, max_size, empty and clear are important. All containers have these functions. The first three of them are expected to have a const time complexity, and the last one linear.

Back to the STL philosophy, there have been interesting debates and discussions about size member function for std::list. As discussed above, STL containers strongly kept the idea of providing simple functions only when they are fast. If we are talking about the size function, by fast we mean in const time. Intuitively seems like it’s not a problem for a container to somehow get it’s size or keep track of it. Obviously, in case of list the solution is to keep track of the size, increment and decrement in case of insertions and removals correspondingly. Turns out it is a problem for a list, and the problem occurs with another (not less important) member function: splice. This functions transfers elements from one list to another. Only the list has this function, because it’s data structure gives the ability to do it very fast, in const time. There are several overloads of this function, but one of them ruins it all, the one that takes two iterators of another list, determining the range of elements to be moved from it. This could be done in const time, and this should be done in const time, but then we lose the track of size. We can’t know how many elements are there in that range. So here we are on the fence. Either we keep track of the size, so that size will have const time complexity, but make the splice have linear complexity, so that we can keep track of the size, or we leave splice run effectively in const time and don’t keep track of the size, just count it every time, making size have linear complexity. There were other options too, to let one of these functions go, but both of them were too important to be left out. I don’t know how the elders of the C++ discussed this (I wish I were there to know), but I know what they came up with. Before C++11 the standard stated that the size should be const time (so it’s not required), and the splice has to be const time. Since C++11 the standard required size to have const time complexity, and splice was allowed to run in linear time for that specific overload. This is a good example of how much people care about the correct interface, and you should too.

/* Iterators */

iterator begin();

const_iterator begin() const;

const_iterator cbegin() const;

iterator end();

const_iterator end() const;

const_iterator cend() const;

reverse_iterator rbegin();

const_reverse_iterator rbegin() const;

const_reverse_iterator crbegin() const;

reverse_iterator rend();

const_reverse_iterator rend() const;

const_reverse_iterator crend() const;The rest of the public functions are simple and boring, get_allocator and some comparison operators.

After defining the interface, it’s time to implement these functions. Be careful, don’t hesitate to write proper unit and coverage tests.

We’re almost there. Before getting to the last serious part, implementing proper iterators, a little hint: derive your container implementation from your allocator type, as the STL ones do. It’s ok, because there should be one allocator instance for each container, and also, by deriving from it, we can benefit from the EBO (empty base optimization), so that if the allocator is a class with no members (like in most cases), it won’t increase the size of the container object.

Back to the iterators. Try to answer this famous interview question: what’s the difference of std::vector<int>::const_iterator and const std::vector<int>::iterator types? It’s important to us, because if they are the same, we can implement only the iterator and just “typedef” the const_iterator. But if they are different types, we will need to write two separate classes. The right answer is: they are different types. The first one is const_iterator, meaning that an instance of it cannot be used to change the element it refers to. The second one is const iterator, meaning that it can change the element it refers to, but it cannot refer to another element. And of course reverse_iterator-s are also different types. The important things to remember about these iterators are:

const_iterator must have a constructor from iterator

reverse_iterator-s must have base member functions

Hopefully, there is an adaptor std::reverse_iterator class in STL which wraps the iterator to become a reverse_iteartor. So no need to write a whole new class, just make sure your iterator is at least of bidirectional category and “typedef” it as in lines 14 and 15 in public type definitions.

The rest is pretty much the same. Let’s start writing then. Rather than writing the iterator inside the container I’ve written it somewhere outside, and just “typedef”-ed it in the container.

template <typename StringT, typename CharT, typename MappedT>

class prefix_tree_iterator

{

private:

using key_type = StringT;

using mapped_type = MappedT;

using self = prefix_tree_iterator<key_type, CharT,

mapped_type>;

public:

using iterator_category = std::bidirectional_iterator_tag;

using value_type = std::pair<const key_type,

mapped_type>;

using difference_type = std::ptrdiff_t;

using pointer = value_type*;

using reference = value_type&;Only five of these definitions are important, the public ones. It is very important to define this types correctly, especially the iterator_category. It should be defined as one of these 5 types:

input_iterator_tag

output_iterator_tag

forward_iterator_tag

bidirectional_iterator_tag

random_access_iterator_tag

These types are defined in std namespace in <iterator> header. This type definition helps the STL algorithms to decide which implementation to use. This is what makes std::distance and std::advance run faster for std::vector's iterators than for the ones of std::list. But how do you know which category your iterator is of.

It depends on what kind of interface your iterator provides. Basically, an iterator must have the operator*() and operator->() operators defined. If it can also have the operator++, it is of forward category. If it also has the operator--, it is of bidirectional category. If it also has the operator+, operator-, operator+=, operator-=, operator[], operator< and other comparison operators (>, ≤, ≥), it is of random_access category. In reality the rules are a bit more strict.

That’s pretty much it. Don’t forget to properly unit test it in the end.

Source: Medium

The Tech Platform

Comments